Yes, when container-based databases were introduced in 12.1, my first thoughts were the same: I found them entirely unnecessary. With 12.2 I changed my mind (a little bit, or even a little bit more), because it includes some really nice features that can be used for free, even on Standard Edition 2.

I don't say that you should or must use these features, but as a consultant and DBA I always want to help my customers do things better or free their working life from boring stuff.

And what is more boring than staying in the office or working from home at night or on a weekend, when you could be playing with your kids, taking a trip to a cool event or meeting some friends?

So if you can't live without spending time on work outside your office hours, don't read this article any further. 😏

For the DOAG I have created a live demo scenario on my notebook (with a Linux VM), and I would like to share not only my knowledge but also some of my scripts, so that you can test everything on your own.

You don't need much to use the scripts as they are, so that you can simply copy and paste them.

Preparation:

- 2 container databases, XFEE1 and XFEE2 (XF is my naming convention on test systems for XFS file systems, AC for ACFS and AS for ASM; EE means Enterprise Edition, SE means Standard Edition. The tests also work with Standard Edition databases and on ACFS/ZFS without any changes).

- 1 Pluggable database EE1PDB1 inside of XFEE1

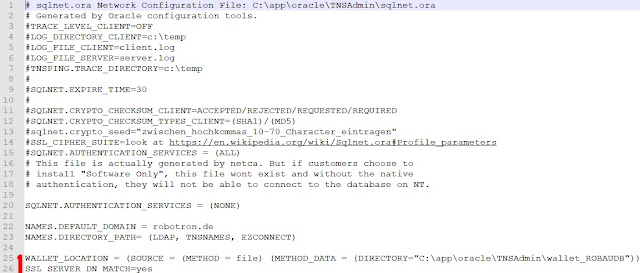

- tnsnames.ora entries to reach XFEE1, XFEE2, EE1PDB1 and EE2PDB1 (EE2PDB1 is used later on for the cloned/snapshot-cloned PDB1 inside XFEE2)

- You need to set CLONE_DB to true (alter system set clone_db=true scope=spfile;) for non-ACFS/ZFS file systems. Restart the database after setting it.

- To not violate any license rules you can also set MAX_PDBS to one (alter system set max_pdbs=1 scope=both;)

- Set all passwords to "oracle" (or change the scripts)

Some people think about using the refreshable remote copy of a PDB as a poor man's standby database, but the best use case I can think of is setting up test environments (for applications or even for database updates) from your production. Another use case is to create a reporting PDB which is automatically refreshed once a day, because you can open the refreshable remote copy read only (and as long as it is opened read only, no refresh happens).
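As a rough sketch of the reporting use case: because no refresh happens while the copy is open read only, a daily job could close the copy, refresh it manually and reopen it read only. This assumes that the refreshable copy EE2PDB1 already exists, that you are connected as SYSDBA, and that the statements are issued from inside the PDB (see the ORA-65118 note further down). The scheduling itself (cron, DBMS_SCHEDULER) is left out.

```sql
-- Sketch only: manual refresh cycle for a read-only reporting copy.
-- Assumption: EE2PDB1 was created as a refreshable clone beforehand.
ALTER SESSION SET CONTAINER = EE2PDB1;

-- The copy must be closed before it can be refreshed.
-- (If it is already closed, this statement raises an error you can ignore.)
ALTER PLUGGABLE DATABASE CLOSE IMMEDIATE;

-- Apply the changes made on the source since the last refresh.
ALTER PLUGGABLE DATABASE REFRESH;

-- Make the copy available for reporting again.
ALTER PLUGGABLE DATABASE OPEN READ ONLY;
```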

Think about the following case: you have your production database up and running, and you are asked to create a test environment. The business guys tell you that they need the test environment in 3 days, and that the data should be the state after business hours or, more horrible, on Saturday night.

What is your way to do this? Creating a standby database with Data Guard (not available for SE2) and stopping recovery on the evening in question, so that you can prepare things and don't need to work a lot that evening? Or do you prepare an empty database, wait for that evening, then run an export on production and an import into the test environment? How long does this take, and how many hours do you spend that evening doing all this work?

A quick solution is to create a refreshable remote copy of a PDB. You set it up in the days before "that evening" comes and let it refresh automatically every minute; all you need to do on "that evening" is stop the refresh and open the database. Maybe, for application reasons, you need to run a SQL script afterwards, but that is always needed (also with a standby or with export/import). Once you have tested this once or twice, you could even create a cron job that does a last refresh, opens the database and runs your application script when it is time to. So you don't need to work in the evening or at the weekend at all. 😊

If you want to test that, you need the things I have mentioned in the preparation part of this post. Then you can just run the following scripts.

The steps in the script are the following (you don't need to create the common user at the receiving database, I have just re-used the picture):

- Create a common user and grant it the required privileges

- Create a database link to the PDB you want to clone from the CDB$ROOT you want the PDB cloned to.

- Create the refreshable PDB clone and let it run until you need it

- Open the automatically refreshed PDB read-write
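The first step, on the source side, might look roughly like the following. This is a minimal sketch, not the original demo script: the user name c##clone_user is a hypothetical choice, the password follows the "oracle" convention from the preparation list, and the two privileges are the ones a remote clone over a database link usually needs.

```sql
-- Sketch only: run as SYSDBA in CDB$ROOT of the source CDB (XFEE1).
-- c##clone_user is a hypothetical name; pick your own.
CREATE USER c##clone_user IDENTIFIED BY oracle CONTAINER = ALL;

-- The database link from the target CDB will connect as this user,
-- so it must be able to log on and to act as a clone source.
GRANT CREATE SESSION, CREATE PLUGGABLE DATABASE
  TO c##clone_user CONTAINER = ALL;
```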

Script 2 to run at the CDB$ROOT where you want the PDB cloned to.
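A minimal sketch of what the statements on the receiving side could look like is shown here; it is an illustration under assumptions, not the original script. It assumes a hypothetical common user c##clone_user (with CREATE SESSION and CREATE PLUGGABLE DATABASE) on the source, a hypothetical link name ee1pdb1_link, the tnsnames.ora alias EE1PDB1 from the preparation list, and Oracle Managed Files on the target (without OMF you would have to add a FILE_NAME_CONVERT clause with your own paths). The one-minute interval is also just an example value.

```sql
-- Sketch only: run as SYSDBA in CDB$ROOT of the target CDB (XFEE2).

-- Link from the target root to the source PDB (names are hypothetical).
CREATE DATABASE LINK ee1pdb1_link
  CONNECT TO c##clone_user IDENTIFIED BY oracle
  USING 'EE1PDB1';

-- Create the refreshable copy; it stays closed and is refreshed
-- automatically at the given interval until you need it.
CREATE PLUGGABLE DATABASE EE2PDB1 FROM EE1PDB1@ee1pdb1_link
  REFRESH MODE EVERY 1 MINUTES;

-- Check that the new PDB is registered as refreshable.
SELECT pdb_name, status, refresh_mode, refresh_interval
  FROM dba_pdbs;
```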

If you try to refresh or to set the refresh mode to none (see script 2), you may get an

ORA-65118: operation affecting a pluggable database cannot be performed from another pluggable database

If so, just set the container to the pdb ( alter session set container=EE2PDB1; ) and try again.
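Put together, the final step on "that evening" could be sketched as follows, issued from inside the PDB to avoid the error above. This assumes a SYSDBA session on XFEE2 and that EE2PDB1 is still closed, as it is while it is being refreshed automatically.

```sql
-- Sketch only: last refresh and switch to a normal read-write PDB.
ALTER SESSION SET CONTAINER = EE2PDB1;

-- One last manual refresh to pick up the latest changes from the source.
ALTER PLUGGABLE DATABASE REFRESH;

-- Stop refreshing. As far as I know this is a one-way change:
-- the PDB cannot be turned back into a refreshable copy afterwards.
ALTER PLUGGABLE DATABASE REFRESH MODE NONE;

-- Open the former copy for the test environment.
ALTER PLUGGABLE DATABASE OPEN READ WRITE;
```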

I hope this will help you in your daily work. Stay tuned, there are more use cases where it is really nice to use PDBs instead of doing it "the old way". I will write a new post as soon as I have time to.

As always, comments are welcome.

You can find the second post regarding remote snapshot clone here.